Some types of cancer are often diagnosed only after the disease has metastasized to other parts of the body. Even expert radiologists can struggle to spot an early-developing tumor on CT scans without extensive knowledge of the patient’s background, genetics and lifestyle. The difficulty of obtaining timely medical care around the world delays patients’ access to diagnosis and treatment, allowing malignancies to spread further.

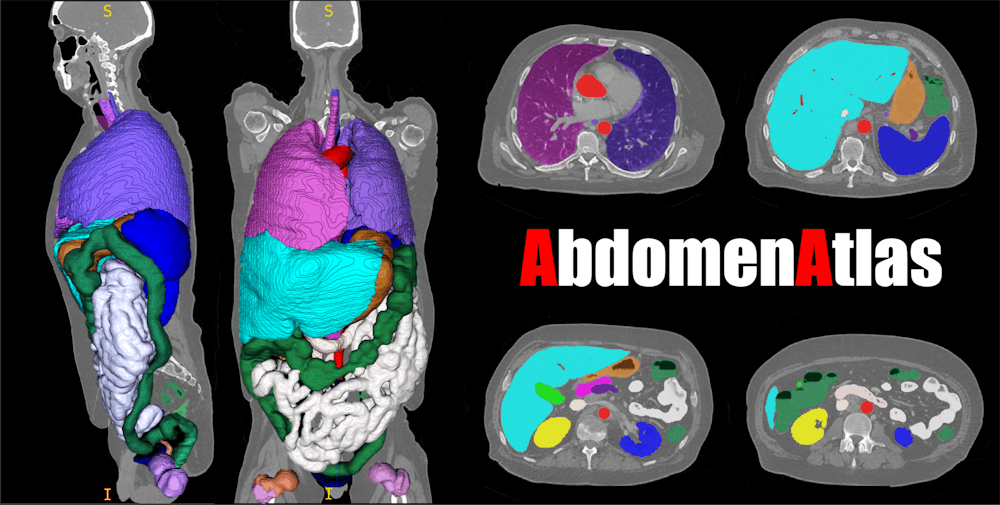

However, a recent paper published by Johns Hopkins researchers in the Department of Computer Science details the development and use of AbdomenAtlas: an annotated public dataset containing abdominal CT scans from tens of thousands of patients around the world. This extensive new dataset serves as a training ground for early-detection artificial intelligence programs, which have the potential to greatly improve the efficiency of cancer diagnosis and treatment.

In an interview with The News-Letter, two core members of the team behind AbdomenAtlas, Zongwei Zhou and Wenxuan Li, explained their work and its applications in medical imaging and diagnosis.

“Our team’s ultimate goal is to create a very reliable AI to help radiologists detect diverse types of cancers,” Zhou explained. “At the current stage, we can detect cancer from the liver, pancreas and kidney, and looking forward, we want to detect all types of tumors at earlier stages, classify them, and predict how they will develop over time.”

Before AbdomenAtlas, a significant roadblock in AI-driven cancer detection was the lack of adequate training data. To address this, the AbdomenAtlas team compiled and annotated 45,000 CT scans from patients around the world, including in the U.S., Asia and Europe. This marks a significant improvement over a previously compiled dataset, TotalSegmentator, which contained around 1,200 scans sourced from a single data repository in Europe.

Additionally, AbdomenAtlas improves on previous efforts in the diversity of anatomical structures it can detect and classify, a process known as annotation: it covers 142 different anatomical structures, compared to TotalSegmentator’s 117 annotated classes. The annotation of cancerous tissues by AbdomenAtlas is crucial to identifying tumors in their early stages. Its detailed annotation process includes precisely locating the boundaries of each anatomical structure, creating clear 3D maps of the abdomen for radiologists to interpret.

“We showed that the AI trained on our dataset can achieve very similar detection performance to humans, and we also showed that an AI trained on this dataset can classify cancers much better than humans,” Zhou said.

Zhou said that the AI annotated around 95% of cases proficiently, while the remaining 5% were much harder to annotate and required a rigorous human review process. The team worked with a dozen expert radiologists from Hopkins and beyond, who reviewed the accuracy of the AI’s low-confidence organ-boundary predictions, eventually resulting in the annotation of 45,000 CT scans.

In the short time since its initial release, AbdomenAtlas has already received significant updates that improve its functionality and expand its data. Version 2.0 improved the program’s ability to detect lesions and tumors in various organs, while Version 3.0 introduced integrated natural-language reports that interpret and describe tumor growth, organ size and tissue health. The team’s dataset has also grown from 45,000 to around 80,000 patient abdominal CT scans.

As the new versions of AbdomenAtlas roll out, the team hopes to expand their work internationally.

“We want to integrate our AI into a web-based AI software that has similar performance levels of the average radiologist reported in the literature,” Zhou detailed. “The software can be used in countries and areas where there are not many expert radiologists.”

Through these efforts, Zhou looks to make expert medical imaging and diagnosis accessible to populations worldwide, improving their access to timely care and treatment. The researchers also have high hopes for the future of AI-based medical image analysis. Li views AI as a tool to annotate tissues more quickly, accelerating treatment planning and increasing survival rates for patients.

“We are trying to help radiologists increase the speed of diagnosis and treatment,” Li explained. “AI can be involved as a ‘first reader’ for radiologists; the radiologist may not be able to see details just based on imaging, but the AI can identify potential problem areas and give the radiologist more information to work with.”

Zhou imagines a world where patients’ preliminary scans can be transformed into a digital map, making the treatment process more interactive and personalized.

“Now, we can digitalize the human body and simulate cancer response to surgery and chemotherapy. We can even develop this into AR/VR devices that clinicians can zoom in on, zoom out on, rotate and distort,” Zhou explained. “This is personalized and can improve the efficiency of doctor-patient communication.”