Andrew H. Song, a postdoctoral research fellow at Harvard Medical School, presented his work on applying deep learning tools to cancer pathology at a talk titled “Taming Large-Scale Pathology Data for Clinical Outcome Prediction” on Nov. 13. In his talk, Song delved into his efforts to leverage AI in improving cancer diagnostics, explaining how machine learning models can fundamentally alter the landscape of clinical outcome prediction.

Song opened with a video showing a biopsy tissue sample under a microscope, demonstrating the rich information available in these samples that can now be interpreted through machine learning.

“When a patient is suspected of cancer or another disease, we go in and pick up a portion from the tissue organ,” Song explained. “Since much pathology work is 2D, we cut the sample into thin slices, place them on glass slides and examine them under a microscope or scan them to create a whole slide image. As you zoom in, individual purple sparkles appear, and a pathologist can distinguish cancer cells from normal cells based on the shape of each cell and the way cells cluster or spread throughout the tissue.”

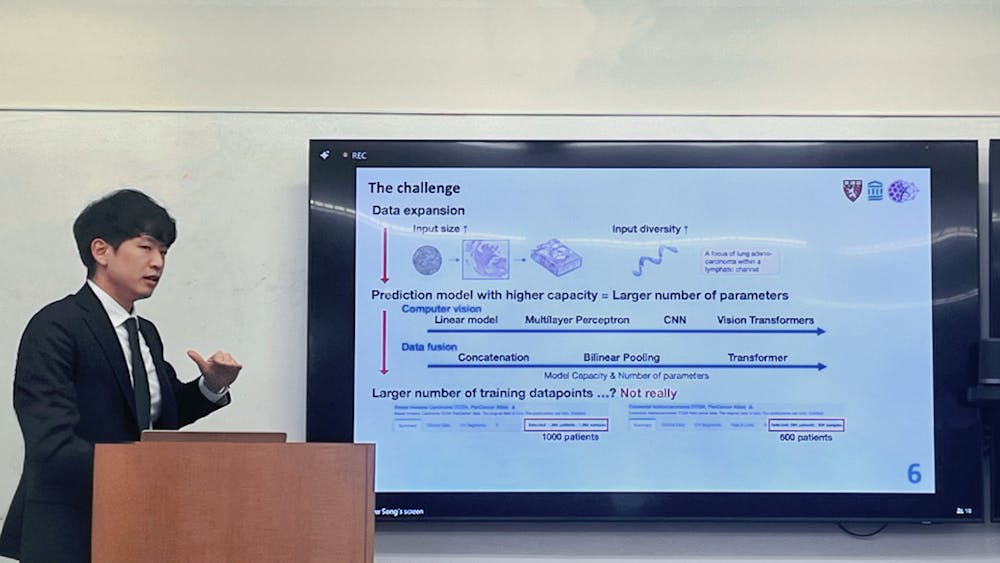

The digital imaging landscape has grown enormously, from the days of one-millimeter core samples on tissue microarrays (TMAs) to whole slide images (WSIs) that demand five gigabytes of storage per sample.

“Looking forward, there’s an even larger data layer: the whole block image, which is a 3D view of the tissue,” Song said. “This volumetric data better reflects the complexity of human tissue and poses additional challenges for computation and storage.”

Song’s research focuses on utilizing patient clinical data at a comprehensive level, including proteomics, genomics, spatial transcriptomics, as well as immunohistochemistry and bulk RNA sequencing data. This “big data” approach allows researchers to build holistic models of patient health.

One key application of AI is to streamline traditional pathology tasks, such as cancer subtyping and grading.

Song explained that subtyping involves classifying tissue into specific cancer subtypes, while grading assesses the severity of the cancer. He noted that although pathologists perform these tasks, variability can affect their accuracy. He added that AI can streamline these processes, reducing variability and improving efficiency.

The second AI application is in predicting clinical outcomes for patients whose prognosis is uncertain.

“For instance, within a cancer cohort, we want to identify patients with a high risk of developing more severe forms of cancer versus low risk who do not need urgent treatment for certain treatment responses; we also want to identify the patients who will respond well to certain treatments or drugs,” he said. “AI, in these cases, will not only be able to identify the low risk versus high risk cohorts, but also find out common biomarkers or patterns underlying each of the groups.”

The third application — integrating data from multiple modalities — is an area for which no clinical guidelines exist, leaving AI as a valuable tool in extracting the complementary information necessary for predicting patient outcomes.

To manage the overwhelming scale of data, Song developed a standardized data pipeline for predictive modeling. His approach involves dividing each WSI into thousands of 256 x 256 pixel patches, each reduced to a low-dimensional representation using a “patch encoder.” The next step involves a “slide encoder” that aggregates all patch embeddings into a single, patient-level representation. This process compresses the data without sacrificing diagnostic accuracy.
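The two-stage pipeline described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `toy_patch_encoder` and `toy_slide_encoder` are hypothetical stand-ins (simple statistics and averaging) for the learned neural networks used in practice, and the image size and pixel values are invented for the example.

```python
from statistics import fmean

PATCH = 256  # patch side length in pixels, as described in the talk


def patch_coords(width, height, patch=PATCH):
    """Top-left corners of non-overlapping patches that fit fully inside the image."""
    return [(x, y)
            for y in range(0, height - patch + 1, patch)
            for x in range(0, width - patch + 1, patch)]


def toy_patch_encoder(pixels):
    """Hypothetical stand-in for a learned patch encoder: pixels -> 2-D vector."""
    return [fmean(pixels), max(pixels) - min(pixels)]


def toy_slide_encoder(embeddings):
    """Hypothetical stand-in for a learned slide encoder: average each dimension."""
    return [fmean(dim) for dim in zip(*embeddings)]


# A made-up 1024 x 512 image; each patch is faked as a short list of intensities.
coords = patch_coords(1024, 512)
patches = [[(x + y) % 7, (x * y) % 5, 3] for x, y in coords]

patch_embeddings = [toy_patch_encoder(p) for p in patches]
slide_vector = toy_slide_encoder(patch_embeddings)  # one vector for the whole slide
```

A real WSI would yield thousands of patches rather than eight, but the shape of the computation is the same: many patch vectors in, one slide-level vector out.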

The PANTHER framework, a morphological prototype-based compression approach Song developed during his doctoral research, further optimizes this process by reducing the number of patches per WSI. The paper was recently published in CVPR.

“At the end of the day, we need to aggregate everything from 10,000 patch embeddings into one. The question is, do we need all of them, or is a subset of them sufficient for downstream tasks? Or simply, can we do some kind of dimensionality reduction on the whole slide image and still get good performance? The answer is yes,” Song noted.

Using Gaussian mixture models, PANTHER identifies repeating morphological patterns within a tissue sample and compresses them into representative prototypes, making the data more interpretable for clinicians.
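To give a feel for how a Gaussian mixture model can stand in for many similar patches, here is a minimal sketch, not PANTHER’s actual implementation. It assumes one-dimensional “embeddings” and two prototypes for readability (real patch embeddings are high-dimensional), and the function name `fit_gmm_1d`, the data and the starting means are all invented for illustration.

```python
import math


def fit_gmm_1d(xs, init_means, n_iter=50):
    """Fit a 1-D Gaussian mixture with EM; returns (weights, means, variances)."""
    k = len(init_means)
    means = list(init_means)
    weights = [1.0 / k] * k
    variances = [1.0] * k
    for _ in range(n_iter):
        # E-step: how responsible is each component for each point?
        resp = []
        for x in xs:
            dens = [w * math.exp(-(x - m) ** 2 / (2 * v)) / math.sqrt(2 * math.pi * v)
                    for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate each component from its weighted points.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var, 1e-6)  # floor keeps the density well defined
    return weights, means, variances


# Eight toy patch values forming two repeating "patterns" (around 1 and around 5).
values = [0.9, 1.0, 1.1, 1.2, 0.8, 4.9, 5.0, 5.1]
weights, means, variances = fit_gmm_1d(values, init_means=[0.0, 6.0])

# Compressed summary: a few numbers per prototype instead of one per patch.
summary = list(zip(weights, means, variances))
```

Each fitted mean acts as a prototype, and its weight indicates how much of the sample resembles that prototype, which is the kind of quantity a clinician can inspect directly.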

“Once you have this kind of model, the good thing about this is not only improved performance, but also better interpretability clinically, because everything is explained based on basic morphological concepts,” Song said.

Song’s collaboration with Dr. Alexander Baras from the Johns Hopkins School of Medicine led to the creation of MultiModal PANTHER, an extension of PANTHER that integrates both histology and genomics. This fusion model compresses genomic data through gene pathways or functional gene sets, providing a streamlined, powerful approach to survival prediction. The work was recently published in ICML.

“The gene expression that you typically get from bulk sequencing is also quite large, and each element doesn’t really mean much,” he explained. “We compressed it further so that we only have a certain functional set of genes that has certain functions.”
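One simple way to picture this kind of compression is to collapse per-gene values into one score per gene set. The sketch below averages expression within each set; the averaging rule is an assumption made for illustration, since the talk did not specify how the pathway summaries are computed, and the gene and pathway names are placeholders.

```python
from statistics import fmean

# Placeholder expression values for one patient (gene name -> expression level).
expression = {"GENE_A": 2.0, "GENE_B": 4.0, "GENE_C": 1.0, "GENE_D": 3.0, "GENE_E": 9.0}

# Placeholder functional gene sets; GENE_X is deliberately absent from the data.
pathways = {
    "pathway_1": ["GENE_A", "GENE_B"],
    "pathway_2": ["GENE_C", "GENE_D", "GENE_X"],
}


def pathway_scores(expression, pathways):
    """Average the measured genes in each set; skip sets with no measured genes."""
    scores = {}
    for name, genes in pathways.items():
        measured = [expression[g] for g in genes if g in expression]
        if measured:
            scores[name] = fmean(measured)
    return scores


scores = pathway_scores(expression, pathways)
```

Five gene-level values become two pathway-level values, each tied to a named biological function rather than a single gene.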

In recent work, Song utilized self-supervised learning to optimize slide encoders without requiring large labeled datasets. By masking parts of an image and tasking the model with reconstructing it, Song’s team teaches the network to recognize key image features independently. In a recent project, Song developed a general-purpose slide encoder, based on a vision transformer with 42 million parameters, that performs well across multiple classification tasks without fine-tuning. This vision-language model showed promising accuracy, outperforming recent frameworks published in Nature.
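The masking idea can be illustrated without any neural network. In this sketch, half of a slide’s patch values are hidden and a prediction is scored only on the hidden positions. The 50 percent mask ratio, the toy values and the “predict the visible mean” baseline are assumptions for the example, not details from Song’s work.

```python
import random


def make_mask(n_patches, mask_ratio, seed=0):
    """Choose which patch positions to hide from the model."""
    rng = random.Random(seed)
    return set(rng.sample(range(n_patches), int(n_patches * mask_ratio)))


def masked_mse(targets, predictions, masked):
    """Mean squared error computed only over the hidden positions."""
    errors = [(targets[i] - predictions[i]) ** 2 for i in sorted(masked)]
    return sum(errors) / len(errors)


targets = [0.1, 0.4, 0.3, 0.9, 0.5, 0.7, 0.2, 0.8]  # toy per-patch values
masked = make_mask(len(targets), mask_ratio=0.5)

# What the model would see: hidden positions are replaced by None.
visible = [None if i in masked else t for i, t in enumerate(targets)]

# Trivial baseline "model": guess the mean of the visible values everywhere.
seen = [v for v in visible if v is not None]
baseline = [sum(seen) / len(seen)] * len(targets)
loss = masked_mse(targets, baseline, masked)
```

Training a network to drive this loss down requires no human labels, because the hidden values themselves serve as the answer key.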

The final part of Song’s talk was dedicated to his vision of transitioning from 2D to 3D pathology, a shift motivated by the complexity of human tissue and the loss of information when reducing 3D data to 2D slices.

According to Song, advances in imaging hardware have made 3D pathology possible, but software for analyzing this data remains underdeveloped. Song’s recent work, published in Cell, presents a framework optimized for 3D data, designed to process volumetric images for improved diagnostic accuracy.

“As the amount of available data explodes, we need certain software that can really analyze this... The concept is as follows, you have a large image that you are going to divide into smaller 3D chunks, and then you take each 3D chunk, pass them through 3D patch encoders and combine them with block encoders… the pipeline is similar to 2D counterparts, but each of the steps is optimized for 3D processing,” Song remarked.
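The first step Song describes, dividing a volume into smaller 3D chunks, might look like the following. The volume and chunk sizes are hypothetical, and the helper `chunk_origins` is an illustrative name rather than part of Song’s framework.

```python
from itertools import product


def chunk_origins(shape, chunk):
    """Origins (z, y, x) of chunks tiling a volume.

    Chunks on the far edges may extend past the volume and would need padding.
    """
    steps = [range(0, dim, size) for dim, size in zip(shape, chunk)]
    return list(product(*steps))


# Hypothetical volume of 100 x 512 x 512 voxels, cut into 32 x 128 x 128 chunks.
origins = chunk_origins(shape=(100, 512, 512), chunk=(32, 128, 128))
n_chunks = len(origins)
```

Each origin would then be handed to a 3D patch encoder, with a block encoder combining the results, mirroring the 2D pipeline.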

Song discussed how this model has improved prognostic prediction for prostate cancer by allowing for a detailed morphological analysis of whole tissue volumes rather than individual slices. His team used different imaging techniques to ensure the framework’s robustness across modalities, an approach that yielded stronger prognostic performance as the portion of volume analyzed increased.

Song demonstrated the model’s interpretability with 3D heatmaps, showing that it could identify areas of morphological significance associated with cancer risk.

“The encouraging part was we didn’t tell the network a priori which kind of morphology was important. It was able to identify this 3D region by just looking at the data,” Song remarked. “Now that we have access to the whole volume rather than to the tissue slices, we do achieve better prognosis because we have more information from the patient; and secondly, since we have access to the whole volume, we can utilize 3D morphology to improve our results.”

Song’s ultimate goal is the creation of universal patient representations through digital twins. His talk underscored the role of AI in pathology, where innovative compression techniques, multimodal integration and adaptive learning methods continue to drive toward more accurate, patient-specific predictions in cancer treatment.