In the midst of the COVID-19 pandemic, doubts about vaccine effectiveness grew. Social media fueled anti-vaccination campaigns and spread dangerous misinformation. One increasingly prevalent form of misinformation is the deep fake, which poses serious concerns for healthcare. Deep fakes are videos, images, or audio recordings that have been digitally altered with artificial intelligence, for example to manipulate the words of popular figures such as politicians or health experts. They can exacerbate global health crises like epidemics and pandemics.

COVID-19 was the third leading cause of death in the United States in 2021. Even when vaccines were readily available, many people refused them because of misinformation. Today most people learn about current events digitally, through videos and audio files, and COVID-19 reinforced this trend: as lockdowns moved learning onto online services such as Zoom, online sources became the go-to place for validating information.

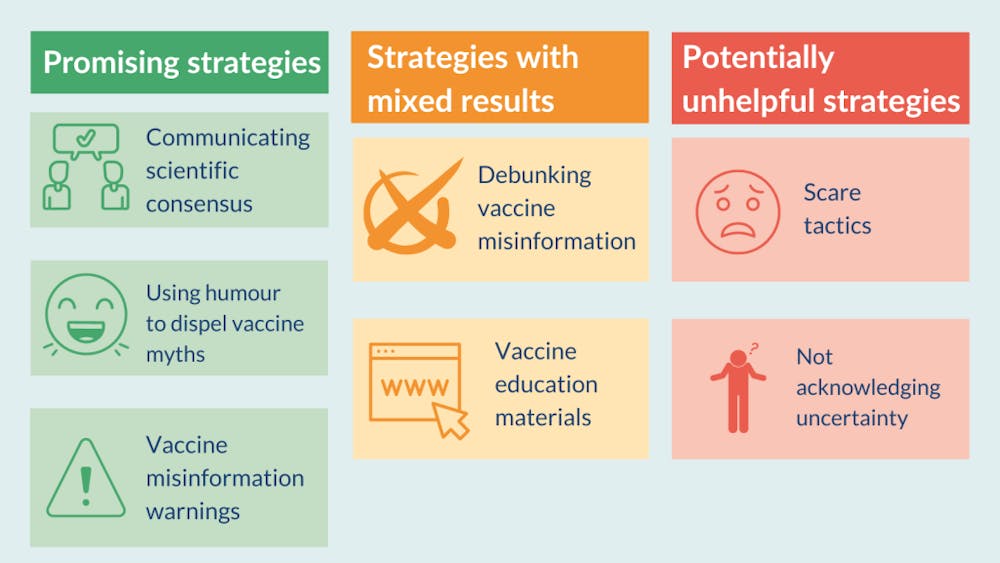

Scientific experts can share updates on vaccine development on social media platforms in real time. Experts can also track misinformation posted online in an effort to debunk it. However, platforms such as Twitter and Instagram have only limited moderation algorithms for detecting and removing AI-generated misinformation. Under Section 230 of the Communications Decency Act, social media companies are generally not held liable for misinformation that users spread on their platforms; in other words, these companies largely cannot be successfully sued over such content. The First Amendment also protects some deep fakes as a form of free speech, so a total ban would likely violate the Constitution.

According to a survey of 19,060 American adults, one in five are unsure whether to believe scientists' claims about vaccines, and a third of those who believe coronavirus misinformation are aware that they disagree with scientific experts. Gen Z in particular has developed an overreliance on social media and tends to trust other users over reliable experts. The elderly and the young are most likely to fall prey to misinformation, and individuals who already have doubts are especially likely to believe it because of confirmation bias.

Deep fakes take existing content from media platforms and superimpose fabricated material onto it so that the fake content looks real. As a result, it is becoming increasingly difficult to distinguish truth from lies, even in seemingly “credible” sources. Social media circulates information widely, and people are more likely to believe information they encounter repeatedly, even when it is not credible. Repeated exposure to deep fakes can therefore erode trust in science, and in information more broadly, as the truth becomes elusive.

Mistrust in public institutions and information can make public health threats more dangerous. People who do not know what to believe may disregard government protocols and widely accepted safety measures, leaving them more vulnerable during a pandemic. Even healthcare providers felt heightened anxiety during the pandemic because of conspiracy theories and general distrust, which could reduce their effectiveness in hospital settings.

Social media is clearly pivotal for spreading information, and if regulated properly, it can be a valuable resource. Efforts to eradicate misinformation should start by protecting the metadata of online content. A company called Digimarc has patented digital watermarks that would be embedded into video or audio files. The watermarks would contain numerical segment identifiers for each element of the content, such as a face or the pitch of a voice. If someone swaps the face or audio in the content, the swapped segments will lack the matching hash recorded in the watermark, signaling that a deep fake has been made. Algorithms could then detect when the metadata of a video or image has been tampered with and remove that content from media platforms.
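To illustrate the general idea of per-segment hashes, here is a minimal Python sketch. It is not Digimarc's actual method: it assumes each piece of content can be split into labeled segments (the IDs such as `face-001` and the functions `build_watermark` and `find_tampered` are hypothetical), and it simply stores a SHA-256 hash per segment at publication time, then flags any segment whose current hash no longer matches.

```python
import hashlib


def segment_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest for one content segment."""
    return hashlib.sha256(data).hexdigest()


def build_watermark(segments: dict[str, bytes]) -> dict[str, str]:
    """Record a hash for every segment when the content is published.

    The segment IDs (e.g. "face-001") are hypothetical labels for
    illustration, not a real watermark format.
    """
    return {seg_id: segment_hash(data) for seg_id, data in segments.items()}


def find_tampered(segments: dict[str, bytes], watermark: dict[str, str]) -> list[str]:
    """Return IDs of segments that do not match the recorded watermark."""
    tampered = []
    for seg_id, expected in watermark.items():
        data = segments.get(seg_id)
        # A missing segment or a changed hash both indicate manipulation.
        if data is None or segment_hash(data) != expected:
            tampered.append(seg_id)
    # Segments that did not exist at publication time are also suspicious.
    tampered.extend(seg_id for seg_id in segments if seg_id not in watermark)
    return sorted(tampered)


if __name__ == "__main__":
    original = {"face-001": b"face pixels", "voice-001": b"voice samples"}
    watermark = build_watermark(original)

    # Simulate a deep fake that swaps only the face segment.
    swapped = {**original, "face-001": b"fake face"}
    print(find_tampered(original, watermark))  # []
    print(find_tampered(swapped, watermark))   # ['face-001']
```

Because only the swapped segment's hash changes, the check points to which part of the content was altered rather than just flagging the whole file.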

Another avenue for deep fake detection is blockchain. A blockchain is a transparent, shared ledger on which users can publicly post data almost immediately. Data is stored in “blocks,” and each block is linked to the one before it by a cryptographic hash, forming a chain that is difficult to tamper with and accessible to anyone in the network. If original metadata is recorded publicly on a blockchain, manipulated copies can be checked against these accurate originals, making it much harder for hackers to alter data undetected.
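The hash-linking that makes a chain hard to tamper with can be sketched in a few lines of Python. This is a simplified, single-machine hash chain rather than a real distributed blockchain (no consensus, mining, or network), and the record fields `video_id` and `sha256`, as well as the helper names, are hypothetical choices for illustration.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    record: dict          # hypothetical metadata, e.g. {"video_id": ..., "sha256": ...}
    prev_hash: str
    block_hash: str = ""

    def compute_hash(self) -> str:
        """Hash this block's contents, including the previous block's hash."""
        payload = json.dumps(
            {"index": self.index, "record": self.record, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[Block], record: dict) -> Block:
    """Add a new block linked to the current end of the chain."""
    prev_hash = chain[-1].block_hash if chain else GENESIS_PREV
    block = Block(index=len(chain), record=record, prev_hash=prev_hash)
    block.block_hash = block.compute_hash()
    chain.append(block)
    return block


def chain_is_valid(chain: list[Block]) -> bool:
    """Check that no block was edited and every link still points to its predecessor."""
    for i, block in enumerate(chain):
        if block.block_hash != block.compute_hash():
            return False
        expected_prev = chain[i - 1].block_hash if i > 0 else GENESIS_PREV
        if block.prev_hash != expected_prev:
            return False
    return True


def is_registered(chain: list[Block], video_id: str, content: bytes) -> bool:
    """Return True if this exact content matches a registered original."""
    digest = hashlib.sha256(content).hexdigest()
    return any(
        b.record.get("video_id") == video_id and b.record.get("sha256") == digest
        for b in chain
    )


if __name__ == "__main__":
    chain: list[Block] = []
    append_block(chain, {"video_id": "briefing-01",
                         "sha256": hashlib.sha256(b"original video").hexdigest()})
    print(chain_is_valid(chain))                                 # True
    print(is_registered(chain, "briefing-01", b"original video"))  # True
    print(is_registered(chain, "briefing-01", b"deep fake"))       # False
```

Editing any stored record changes that block's recomputed hash, so `chain_is_valid` fails; in a real network, other participants holding copies of the chain would also notice the mismatch.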

Additionally, the US government should focus on reforming Section 230 of the Communications Decency Act so that media companies have a financial incentive to adopt stricter moderation policies. Such reforms should still protect free speech while encouraging more accurate information.

In the digital age, deep fakes can have catastrophic consequences for democracy. Government elections, trust in institutions like the CDC and the FDA, news reporting, and overall public safety are all at risk because of the general public’s vulnerability. It is imperative to curb deep fakes before it is too late.