Technology has had a significant impact on health care, improving imaging and helping physicians diagnose patients accurately and efficiently. As technology's role in medicine has grown, so has concern among those who fear a science-fiction-style crisis.

These fears may not be unfounded, according to a team of researchers from Harvard and the Massachusetts Institute of Technology (MIT).

Artificial intelligence (AI) is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals. AI systems are often programmed to mimic human decision-making, ideally without human error or bias. However, because they are designed and programmed by humans, they are also subject to human manipulation.

In health care, this could become a problem, according to a new study.

Last year, the Food and Drug Administration (FDA) approved a device that can capture an image of your retina and automatically detect signs of diabetic blindness. AI technology like this is spreading rapidly across medicine and could help more people receive care at lower cost. Some of these technologies even make diagnoses without a physician's involvement, which could serve millions of people in developing countries and rural areas.

AI is also likely to move into other parts of health care, including the computer systems used by hospital billing departments and insurance companies. In the near future, AI may help assess the health of your body as well as determine reimbursement payments and fees for insurance providers.

A recently published study in the journal Science highlights the possibility of "adversarial attacks," which the researchers define as manipulations of digital data that can change the behavior of AI. Altering just a few pixels in a diagnostic image could, for example, cause an AI system to identify an illness that is not there or miss one that is.

This has serious implications for the multibillion-dollar health-care industry.

Samuel Finlayson, a researcher at Harvard Medical School and MIT and one of the paper's authors, warned that some doctors and medical organizations already manipulate data to increase their profits. Doctors have been found to subtly change billing codes, for example by describing a simple test such as an X-ray as a more complex procedure in order to increase payouts from insurance companies.

Finlayson and his colleagues argue that AI could be another target for this type of manipulation.

An adversarial attack takes advantage of the way in which many AI systems operate.

Many of today's AI systems are driven by neural networks, complex mathematical systems that learn tasks by analyzing large amounts of data. If this "machine learning" is manipulated, it can produce unexpected results. Finlayson and his colleagues are concerned that as regulators, insurance providers and billing companies adopt AI software, businesses could learn how to game the underlying algorithms for their own benefit.

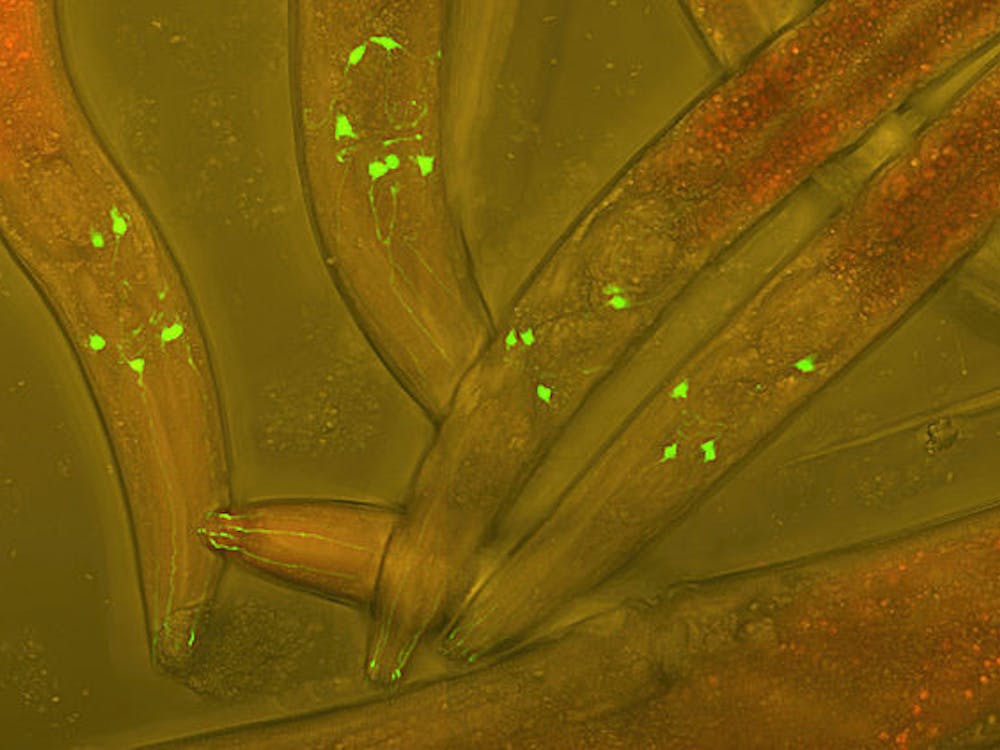

In their paper, the researchers demonstrated that, by making small changes to input data, AI systems could be fooled into making incorrect diagnoses.

The team specifically manipulated a small number of pixels in an image of a benign skin lesion and found that the diagnostic AI system identified the lesion as malignant.
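To show why changing only a handful of pixels can be enough, here is a minimal sketch using a deliberately simple stand-in: a toy linear "lesion classifier" over a 16-pixel image. The weights, bias, image, and the function names `score`, `classify` and `sparse_attack` are all hypothetical and invented for illustration; this is not the researchers' model or their attack method, which targeted a deep neural network.

```python
from typing import List

# Hypothetical weights for a toy linear "lesion classifier" over a 4x4 image
# (16 pixel intensities, flattened). These numbers are invented for illustration.
WEIGHTS: List[float] = [0.5, -0.2, 0.1, 0.8, -0.4, 0.3, 0.0, 0.6,
                        -0.1, 0.2, 0.7, -0.3, 0.4, -0.5, 0.1, 0.9]
BIAS = -2.0


def score(image: List[float]) -> float:
    """Linear score: a positive value means the toy model says 'malignant'."""
    return sum(w * p for w, p in zip(WEIGHTS, image)) + BIAS


def classify(image: List[float]) -> str:
    return "malignant" if score(image) > 0 else "benign"


def sparse_attack(image: List[float], k: int, epsilon: float) -> List[float]:
    """Nudge only the k most influential pixels, each by at most epsilon.

    For a linear model, moving pixel i by epsilon in the direction of
    sign(w_i) raises the score by |w_i| * epsilon (before clipping), so the
    pixels with the largest |w_i| give the biggest push per pixel changed.
    """
    ranked = sorted(range(len(image)), key=lambda i: abs(WEIGHTS[i]), reverse=True)
    adversarial = list(image)
    for i in ranked[:k]:
        step = epsilon if WEIGHTS[i] > 0 else -epsilon
        # Keep pixel values inside the valid [0, 1] range.
        adversarial[i] = min(1.0, max(0.0, adversarial[i] + step))
    return adversarial


benign_image = [0.5] * 16
attacked = sparse_attack(benign_image, k=3, epsilon=0.25)

print(classify(benign_image))  # benign (score about -0.45)
print(classify(attacked))      # malignant (score about 0.15)
```

Here only 3 of 16 pixels move, each by a quarter of the intensity range, yet the label flips. Real attacks on neural networks typically use the model's gradients to pick the changes, but the underlying idea of small, targeted edits to the input is the same.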

The researchers also observed that minor changes to written descriptions of a condition could alter an AI diagnosis. For example, "alcohol abuse" could produce a different diagnosis than "alcohol dependence," showing how sensitive AI diagnostic systems can be to small differences in wording.

Because diagnoses determine billing, such shifts could directly affect the revenue of organizations such as insurance providers and hospital billing departments.

Manipulating AI systems could seriously harm patients, Finlayson told The New York Times. Deliberate changes made to medical scans or other patient data to satisfy the AI systems used by insurance companies could end up on a patient's permanent record and affect future decisions about their medical care.

Kale Hyder, a freshman majoring in Neuroscience on the pre-medical track, expressed concern over the potential abuse of AI technology by physicians.

“I never thought about [health-care agents] increasing their profits by falsifying the readings,” Hyder said in an interview with The News-Letter.

Hyder said he believes technology has been good for health care, but he also stressed the importance of human interaction in patient care.

“I see technology as definitely advancing health care because it allows physicians and health-care professionals to automate certain tasks and become more efficient and productive,” Hyder said. “I also think it’s really meaningful to have that connection with a physician, and I don’t think a computer or a robot would be able to do that justice.”

When asked about the potential for manipulating AI to boost profits, Hyder stressed the importance of regulation and of ethical conduct by health-care agents.

“Ultimately, it is just going to have to be people putting the patient first... In most cases this will probably happen, but hopefully regulation keeps [abuse] from happening,” Hyder said.

As a student on the pre-medical track, Hyder expressed hope for the future of health care.

“I hope that the physician-patient interaction where physicians are entering the room and having those meaningful conversations and really working through a treatment plan with their patients, stays intact for as long as there are physicians in health care,” Hyder said.